#inertial measurement unit


Text

Latest 2024 Honda Africa Twin CRF1100L Price in Indonesia

Latest 2024 Honda Africa Twin CRF1100L price in Indonesia. Greetings from pertamax7.com. Smartphone link (here). Greetings, big-bike fans. There is official news from Jakarta under the headline "The Latest Honda CRF1100L Africa Twin Is Ready to Captivate True Adventurers": PT Astra Honda Motor (AHM) presents the latest model of the CRF1100L Africa Twin with a design and…

#2024 Honda Africa Twin CRF1100L#CRF1100L#Harga Honda Africa Twin#Harga Honda Africa Twin CRF1100L#honda africa twin#Honda Africa Twin CRF1100L#inertial measurement unit#Showa Electronically Equipped Ride Adjustment

0 notes

Text

MPU-6050: Features, Specifications & Important Applications

The MPU-6050 is a popular Inertial Measurement Unit (IMU) sensor module that combines a gyroscope and an accelerometer. It is commonly used in various electronic projects, particularly in applications that require motion sensing or orientation tracking.

Features of MPU-6050

It packs a 3-axis gyroscope and a 3-axis accelerometer into a single chip, which makes it a 6-axis IMU.

Here are the key features of the MPU-6050:

Gyroscope:

3-Axis Gyroscope: Measures angular velocity around the X, Y, and Z axes. Provides data on how fast the sensor is rotating in degrees per second (°/s).

Accelerometer:

3-Axis Accelerometer: Measures acceleration along the X, Y, and Z axes. Provides information about changes in velocity and about the orientation of the sensor relative to Earth's gravity.

Digital Motion Processor (DMP):

Integrated DMP: The MPU-6050 features a Digital Motion Processor that offloads complex motion processing tasks from the host microcontroller, reducing the computational load on the main system.

Communication Interface:

I2C (Inter-Integrated Circuit): The MPU-6050 communicates with a microcontroller using the I2C protocol, making it easy to interface with a variety of microcontrollers.

Temperature Sensor:

Onboard Temperature Sensor: The chip includes an integrated temperature sensor. It reports the temperature of the die rather than of the surrounding air, so it gives only a rough indication of ambient temperature.

Programmable Gyroscope and Accelerometer Range:

Configurable Sensitivity: Users can adjust the full-scale range of the gyroscope and accelerometer to suit their specific application requirements.

Low Power Consumption:

Low Power Operation: Designed for low power consumption, making it suitable for battery-powered and energy-efficient applications.
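To make the configurable ranges above concrete, here is a minimal sketch of turning raw 16-bit readings into physical units. The scale factors are the nominal sensitivities given in the MPU-6050 datasheet for each full-scale setting. The function names are made up for illustration, and actually reading the registers over I2C is left out.

```python
# Nominal MPU-6050 sensitivities (counts per unit) for each full-scale range.
ACCEL_LSB_PER_G = {2: 16384.0, 4: 8192.0, 8: 4096.0, 16: 2048.0}
GYRO_LSB_PER_DPS = {250: 131.0, 500: 65.5, 1000: 32.8, 2000: 16.4}


def to_signed16(high_byte, low_byte):
    """Combine two register bytes into a signed 16-bit integer."""
    value = (high_byte << 8) | low_byte
    return value - 65536 if value >= 32768 else value


def accel_g(raw, full_scale_g=2):
    """Convert a raw accelerometer count to g for the chosen range."""
    return raw / ACCEL_LSB_PER_G[full_scale_g]


def gyro_dps(raw, full_scale_dps=250):
    """Convert a raw gyroscope count to degrees per second."""
    return raw / GYRO_LSB_PER_DPS[full_scale_dps]


def temperature_c(raw):
    """Convert a raw temperature count to degrees Celsius (datasheet formula)."""
    return raw / 340.0 + 36.53
```

A wider range trades resolution for headroom: the same raw count represents a larger physical value at ±16 g than at ±2 g.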


#mpu6050#MPU-6050#IMU#accelerometer#gyroscope#magnetometer#6-axis IMU#inertial measurement unit#motion tracking#orientation sensing#navigation#robotics#drones#wearable devices#IoT#consumer electronics#industrial automation#automotive#aerospace#defense#MPU-6050 features#MPU-6050 specifications#MPU-6050 applications#MPU-6050 datasheet#MPU-6050 tutorial#MPU-6050 library#MPU-6050 programming#MPU-6050 projects

0 notes

Text

The global inertial measurement unit market size is calculated at USD 25.44 billion in 2024 and is expected to be worth around USD 48.20 billion by 2033. It is poised to grow at a CAGR of 7.35% from 2024 to 2033.
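As a quick check on figures like these, the compound annual growth rate can be recomputed from the two endpoint values. This is a minimal sketch; the function name is made up for illustration, and it assumes the growth period is the nine years from 2024 to 2033.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.07 means 7%)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


rate = cagr(25.44, 48.20, 2033 - 2024)
print(f"Implied CAGR: {rate:.2%}")
```

The result comes out at roughly 7.4%, which agrees with the quoted 7.35% to within rounding.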

0 notes

Text

Global defense Inertial Measurement Unit market reports

An Inertial Measurement Unit (IMU) is a device that uses accelerometers and gyroscopes to measure and report specific force and angular rate, respectively. Defense inertial measurement units monitor a number of important quantities, two of which are the specific force and the angular rate of an object. A magnetometer, which measures the magnetic field surrounding the system, is an optional part of this arrangement. Combining a magnetometer with a defense IMU, along with filtering algorithms that determine orientation, yields a device known as an Attitude and Heading Reference System (AHRS).

In general, a single inertial sensor can measure only along or around one axis. To get a three-dimensional solution, three inertial sensors have to be mounted in an orthogonal cluster, or triad. Such a triad is referred to as a 3-axis inertial sensor, since it yields one measurement for each of the three axes. A system that combines a 3-axis accelerometer with a 3-axis gyroscope provides two independent measurements along each of the three axes, six in total, and is called a 6-axis system.
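The six measurements of a 6-axis system can be sketched as a small data structure, together with one thing the accelerometer triad alone can provide: roll and pitch from the direction of gravity. This is a rough illustration only. The class and function names are hypothetical, the axis convention (Z pointing up when the sensor is level) is an assumption, and the tilt formula holds only while the sensor is not otherwise accelerating.

```python
import math
from dataclasses import dataclass


@dataclass
class SixAxisSample:
    ax: float  # acceleration along X, in g
    ay: float  # acceleration along Y, in g
    az: float  # acceleration along Z, in g
    gx: float  # angular rate around X, in deg/s
    gy: float  # angular rate around Y, in deg/s
    gz: float  # angular rate around Z, in deg/s


def roll_pitch_deg(sample):
    """Estimate roll and pitch in degrees from the measured gravity vector."""
    roll = math.degrees(math.atan2(sample.ay, sample.az))
    pitch = math.degrees(
        math.atan2(-sample.ax, math.hypot(sample.ay, sample.az))
    )
    return roll, pitch


level = SixAxisSample(ax=0.0, ay=0.0, az=1.0, gx=0.0, gy=0.0, gz=0.0)
print(roll_pitch_deg(level))
```

Heading (yaw) cannot be recovered this way, because rotating the sensor around the gravity vector does not change the accelerometer reading. That gap is what the gyroscope and the optional magnetometer fill.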

Main elements propelling the market's expansion:

The market for inertial measurement units is expected to rise as a result of the growing use of autonomous cars and advanced driver assistance systems (ADAS) in both the defense and commercial sectors. Demand for MEMS-based IMU technology is driven by its ability to report the precise location of the automotive system in real time.

Trends impacting the market's expansion:

One of the primary drivers of the growth of the defense inertial measurement units market is the growing use of gyroscopes in the defense industry. The instrument is used both for stabilization and for precise measurement of angular velocity. The demand for MEMS-based technology, which enables end users in the commercial automobile and defense sectors to get exact information about their surroundings, is driving the overall growth dynamics.

Dynamics of the Market:

Research spending on defense inertial measurement units is expected to rise, which would propel market expansion. Growth is also expected to be driven by the rising market penetration of unmanned systems. IMUs are currently widely used in Unmanned Aerial Vehicles (UAVs), AGVs, and other robots that need to know their attitude and position in space.

Advancements:

Another increasingly prominent area of research using inertial measurement units is collaborative robotics. The focus of the study is on the positioning of human workers who collaborate with collaborative robots. Knowing where the human workers are is required to achieve safe human-robot cooperation. Vision and ranging sensors can be used to determine the workers' positions. An IMU, on the other hand, can be used to determine both the operator's position and attitude. Compared to the previous technology, which frequently recorded only the operator's approximate location and direction, a motion capture system based on IMUs can follow the movement of the operator's full body, making it more appropriate for human-robot collaboration.

0 notes

Text

By Ben Coxworth

November 22, 2023

(New Atlas)

[The "robot" is named HEAP (Hydraulic Excavator for an Autonomous Purpose), and it's actually a 12-ton Menzi Muck M545 walking excavator that was modified by a team from the ETH Zurich research institute. Among the modifications were the installation of a GNSS global positioning system, a chassis-mounted IMU (inertial measurement unit), a control module, plus LiDAR sensors in its cabin and on its excavating arm.

For this latest project, HEAP began by scanning a construction site, creating a 3D map of it, then recording the locations of boulders (weighing several tonnes each) that had been dumped at the site. The robot then lifted each boulder off the ground and utilized machine vision technology to estimate its weight and center of gravity, and to record its three-dimensional shape.

An algorithm running on HEAP's control module subsequently determined the best location for each boulder, in order to build a stable 6-meter (20-ft) high, 65-meter (213-ft) long dry-stone wall. "Dry-stone" refers to a wall that is made only of stacked stones without any mortar between them.

HEAP proceeded to build such a wall, placing approximately 20 to 30 boulders per building session. According to the researchers, that's about how many would be delivered in one load, if outside rocks were being used. In fact, one of the main attributes of the experimental system is the fact that it allows locally sourced boulders or other building materials to be used, so energy doesn't have to be wasted bringing them in from other locations.

A paper on the study was recently published in the journal Science Robotics. You can see HEAP in boulder-stacking action, in the video below.]

youtube

33 notes


Text

Enterprise and 747 in the Mate-Demate Device at Edwards Air Force Base.

"Deke Slayton set a date of June 17, but that day brought three new problems: failure of an Inertial Measurement Unit, trouble with two of the four primary flight control computers, and a fault with the ejection seats. These were fixed the following day, June 18, 1977, allowing Haise and Fullerton to board the orbiter as it rested atop its carrier. Most of Enterprise’s onboard systems were operating, including two of three APUs and ammonia boilers in an active thermal control system."

Date: June 14-17, 1977

NARA: 12042751

source

#ALT-9#Captive-active flight 1A#Captive-active flight number 1A#Approach and Landing Tests#Space Shuttle#Space Shuttle Enterprise#Enterprise#OV-101#Orbiter#NASA#Space Shuttle Program#Boeing 747 Shuttle Carrier Aircraft#Boeing 747 SCA#Boeing 747#747#Shuttle Carrier Aircraft#Mate-Demate Device#MDD#Dryden Flight Research Center#Edwards Air Force Base#California#June#1977#my post

54 notes


Text

Block I Apollo Guidance Computer (AGC), early Display & Keyboard (DSKY), and Inertial Measurement Unit (IMU)

Udvar Hazy Center, Chantilly, VA

11 notes


Text

"Dave" – Toyota Partner Robot ver. 5 Rolling Type (Trumpet), Toyota, Japan (2005). "Partner robots are expected to support people and work with people in offices, hospitals, care facilities, and homes. They need to move with legs or wheels. … The inertial force-sensing system [commonly called an inertial measurement unit (IMU)] consisted of three acceleration sensors, three angular rate sensors, and a digital signal processor (DSP). The system used automobile sensors such as acceleration and angular rate sensors and had small size, high accuracy, and low cost. … The inertial force-sensing system was used by several robots at the 2005 Aichi Expo and at the 2006 Tokyo Motor Show … They were a biped-type robot playing trumpet, a biped-type robot with wire drive, a person carrier biped-type robot, [Dave] a two-wheeled rolling-type robot with inverted pendulum, and a person carrier of 2+1 wheeled rolling type called ‘i-Swing’." – Sensor Technologies for Automobiles and Robots, Yutaka Nonomura.

6 notes


Text

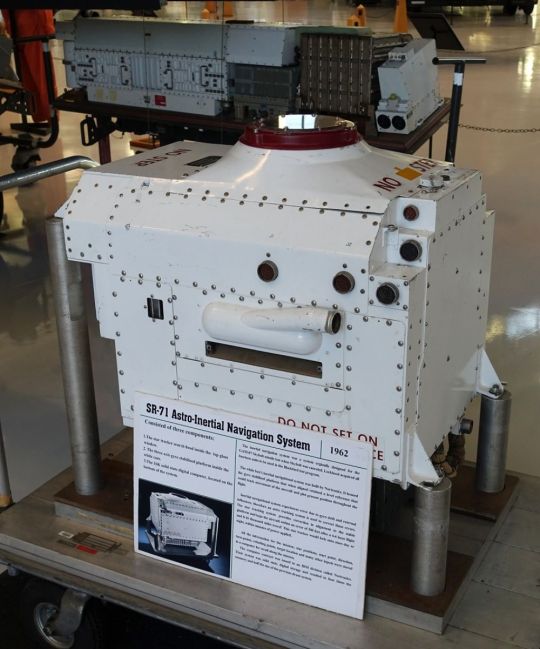

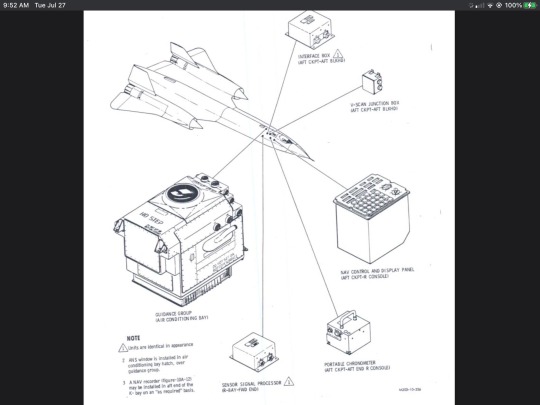

The SR-71 Blackbird Astro-Nav System (aka R2-D2) worked by tracking the stars and was so powerful that it could see the stars even in daylight

Mounted behind the SR-71 Blackbird RSO’s cockpit, this unit (affectionately dubbed “R2-D2” after the Star Wars movie came out in 1977) computed navigational fixes using stars sighted through the lens in the top of the unit.

SR-71 T-Shirts

CLICK HERE to see The Aviation Geek Club contributor Linda Sheffield’s T-shirt designs! Linda has a personal relationship with the SR-71 because her father, Butch Sheffield, flew the Blackbird from flight testing in 1965 until 1973. Butch’s granddaughters Lisa Burroughs and Susan Miller are graphic designers. They designed most of the merchandise that is for sale on Threadless. A percentage of the profits goes to the Flight Test Museum at Edwards Air Force Base. This nonprofit charity is personal to the Sheffield family because they are raising money to house SR-71 #955, the first Blackbird that Butch Sheffield flew, on Oct. 4, 1965.

The SR-71, unofficially known as the “Blackbird,” was a long-range, Mach 3+, strategic reconnaissance aircraft developed from the Lockheed A-12 and YF-12A aircraft.

The first flight of an SR-71 took place on Dec. 22, 1964, and the first SR-71 to enter service was delivered to the 4200th (later 9th) Strategic Reconnaissance Wing at Beale Air Force Base, Calif., in January 1966.

The Blackbird was in a different category from anything that had come before. “Everything had to be invented. Everything,” legendary Skunk Works aircraft designer Kelly Johnson recalled in an article that appeared on the Lockheed Martin website.

Experience gained from the A-12 program convinced the US Air Force that flying the SR-71 safely required two crew members, a pilot and a Reconnaissance Systems Officer (RSO). The RSO operated the wide array of monitoring and defensive systems installed on the airplane. This equipment included a sophisticated Electronic Counter Measures (ECM) system that could jam most acquisition and targeting radar and the Nortronics NAS-14V2 Astroinertial Navigation System (ANS).


SR-71 Astroinertial Navigation System

According to the Smithsonian Institution website, the ANS provided rapid celestial navigation fixes for the SR-71.

Mounted behind the SR-71 RSO’s cockpit, this unit (affectionately dubbed “R2-D2” after the Star Wars movie came out in 1977) computed navigational fixes using stars sighted through the lens in the top of the unit. These fixes were used to update the inertial navigation system and provided course guidance with an accuracy of at least 90 meters (300 feet). Some current aircraft and missile systems use improved versions as a backup to GPS.

Of the ANS, RSOs were known to say, “no one can jam or shoot down the sun, the moon, the planets or the stars.”

Piloting the Blackbird was an unforgiving endeavor, demanding total concentration. But pilots were giddy with their complex, adrenaline-fueled responsibilities. “At 85,000 feet and Mach 3, it was almost a religious experience,” said Air Force Colonel Jim Watkins. “Nothing had prepared me to fly that fast… My God, even now, I get goose bumps remembering.”

The SR-71 Astroinertial Navigation System, aka R2-D2, was crucial to Blackbird missions. Here’s why.

But once the SR-71 reached cruising speed and altitude, it was time to focus on the mission, which was to collect information about hostile and potentially hostile nations using cameras and sensors. The pilot’s job was to handle the aircraft and watch over the automatic systems to make sure they were doing their jobs properly. Meanwhile, the RSO handled the cameras, sensors, and the all-important ANS. The ANS was the 1960s version of GPS, but instead of using satellites to locate itself, the ANS used the stars. This is because, before the invention of the modern satnav networks, there wasn’t a way to navigate the SR-71 in the areas where it operated. The SR-71 needed to be able to fix its position within 1,885 feet (575 m) and within 300 ft (91 m) of the center of its flight path while traveling at high speeds for up to ten hours in the air.

The ANS provided specific pinpoint targets located in hostile territory. It was a gyrocompass that was able to sense the rotation of the Earth while the SR-71 was still on the runway before takeoff. The RSO could take the known coordinates of one spot on the runway and then read off the ANS. They were almost always exactly the same. The same stars were not used on every mission, because the choice depended on what part of the world the aircraft was going to fly to. If flying in the southern hemisphere*, they used only the stars that could be seen there.


On Jul. 2, 1967, Blackbird crew Jim Watkins and Dave Dempster flew the first international sortie in SR-71A #17972 when the ANS failed on a training mission and they accidentally flew into Mexican airspace.

The ANS worked by tracking at least two stars at a time from an onboard catalog and, with the aid of a chronometer, calculating a fix of the SR-71 over the ground. It was programmed before each flight, and the aircraft’s primary alignment and flight plan were recorded on a punched tape that told the aircraft where to go, when to turn, and when to turn the sensors on and off. The stars were sighted through a special quartz window (located behind the RSO cockpit), and there was a special star tracker that could see the stars even in daylight.

*It is not confirmed if the SR-71 ever flew in the southern hemisphere.

@Habubrats71 via X

15 notes


Text

I had begun laying out a PCB drone frame for the esp32 drone project and I was telling the University Friends about it when we checked the local hardware store and saw that this one premade PCB flight frame was in stock, so I've just bought that instead.

Designing one from scratch could be fun, but this way I can get into control logic faster and with a reliable and not horribly cobbled together platform. The board design is open source so if you want you could probably get most of it run off by JLCPCB. As best as I can tell no one has actually built a quadcopter on this platform before so hopefully that's just because no one has tried.

This is basically exactly what I was laying out anyway, just with more SMT parts. MOSFETs driving the motor pads with flyback diodes, an MPU6050 inertial measurement unit, and a basic battery management system. It also costs way less for me to just buy this, all I need now is to print some motor mounts and track down some 720 coreless motors. In the meantime I can try and bring this up and get the underlying control philosophy worked out.

I could run Ardupilot and I may well use it at some point but a) it's still very experimental on ESP32 and b) I really enjoy controls design.

15 notes


Text

Oh shit I got Gravity. I'm scared.

Okay, I'm assuming this works in one of two ways:

A: Object Based, i.e. I can control the strength and direction of the gravitational acceleration experienced by a specific object, with limitations on the size, range, and strength of the object.

B: Field Based, i.e. I can create a gravitational field centered on a location within range that remains fixed relative to either the Earth or me, and causes other objects to behave as if there is an enormous, compact mass in this location. Hopefully not a point mass, because creating 1 g of acceleration at 10 m from the center means 100 g at 1 meter, 10,000 g at 10 cm, 1,000,000 g at 1 cm, and 10^8 g at 1 mm. I'm scared that even if this isn't a real black hole it might be able to shred anything that gets near it and turn objects / the surrounding air into a very hot mass of compacted matter, possibly with its own accretion disc, that will put out horrifying amounts of UV radiation or worse, and violently explode as soon as the field is turned off.
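Quick sanity check on those point-mass numbers, assuming plain inverse-square falloff pinned to 1 g at 10 m. The function name and defaults are just mine for illustration:

```python
def field_strength_g(distance_m, ref_distance_m=10.0, ref_strength_g=1.0):
    """Acceleration in g at a given distance from an idealized point mass."""
    return ref_strength_g * (ref_distance_m / distance_m) ** 2


for distance in (10.0, 1.0, 0.1, 0.01, 0.001):
    print(f"{distance} m -> {field_strength_g(distance):.3g} g")
```

Every factor of 10 closer multiplies the pull by 100, which is where the 100 g, 10,000 g, 1,000,000 g, and 10^8 g figures come from.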

Anyway: the field version has the potential to be a devastating weapon but also extremely difficult to safely use. The more entertaining version of this power is that if I can use it on myself or on a platform that I'm riding, it would theoretically be possible to put things into orbit or even interplanetary trajectories with only a spacesuit and possibly some sort of vehicle. The drawback of this is controlling it would be extremely difficult even with electronics: because anything being moved by a gravity superpower would, from its perspective, be in free fall, I don't think an Inertial Measurement Unit would be usable to measure position or speed.
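Rough sketch of why the IMU goes blind here. I'm assuming an ideal accelerometer, which reports specific force (true acceleration minus gravitational acceleration), and I'm assuming the power acts exactly like a gravity field. The function name is made up:

```python
def accelerometer_reading(true_accel, gravity_accel):
    """Ideal accelerometer output (specific force) per axis, in m/s^2."""
    return tuple(a - g for a, g in zip(true_accel, gravity_accel))


# Sitting on the ground: no acceleration, gravity pulls down at 9.81 m/s^2.
at_rest = accelerometer_reading((0.0, 0.0, 0.0), (0.0, 0.0, -9.81))

# Flung sideways at 5 g by the power: the whole motion is free fall, so the
# true acceleration equals the (modified) gravitational acceleration.
field = (49.05, 0.0, -9.81)
flung = accelerometer_reading(field, field)

print(at_rest)  # the sensor feels the ground pushing up
print(flung)    # the sensor feels nothing on any axis
```

So the accelerometer reads zero no matter how hard the power pulls, and integrating zero gives no velocity or position.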

It would most likely be possible in theory to make a Super Suit HUD/ Engineless Orbital Jet Ski that used GPS signals to get position and speed, but civilian GPS units have a speed limit before they refuse to give you data for reasons that should be obvious if you think about it for a few seconds, and IIRC this limit is about Mach 1.5 which is way too slow, so actually acquiring a way to put stuff onto a trajectory I want would be a "Ask the Defense Department really, really nicely for unlocked GPS hardware and pinky promise not to make an ICBM" kind of thing, as well as probably a "ask someone else who may either have superpowers or also be the Defense Department to build a navigation system in exchange for a few free launches."

In practice this is a really fucking bad idea because the National Reconnaissance Office and also probably quite a few supervillains would probably be very, very interested in someone who can put shit into orbit without the radar/heat signature of a rocket launch. If I had a superpower that could do that there is no way in hell I would let anyone know how effective it was because this is the kind of combo of not optimal for combat / very useful for other things powers that gets you blackmailed into doing shady shit / assassinated by the enemies of whoever's blackmailing you.

I'm remaining neutral, and if I used my powers publicly at all I would if at all possible lie about how they actually work and pretend I have telekinesis/flight.

You discover that you have control over a certain thing, as determined by spinning this wheel. We're talking full-on magical girl/superhero/supervillain/your label of choice control.

29K notes


Text

The Global Inertial Measurement Unit (IMU) Market is estimated to have reached USD 21.3 billion in 2021 and is projected to experience robust growth, reaching USD 40.7 billion by 2026.

0 notes

Text

Sensor Fusion Market Trends and Innovations to Watch in 2024 and Beyond

The global sensor fusion market is poised for substantial growth, with its valuation reaching US$ 8.6 billion in 2023 and projected to grow at a CAGR of 4.8% to reach US$ 14.3 billion by 2034. Sensor fusion refers to the process of integrating data from multiple sensors to achieve a more accurate and comprehensive understanding of a system or environment. By synthesizing data from sources such as cameras, LiDAR, radars, GPS, and accelerometers, sensor fusion enhances decision-making capabilities across applications, including automotive, consumer electronics, healthcare, and industrial systems.
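As a minimal illustration of why combining sensors improves accuracy, the sketch below fuses two noisy estimates of the same quantity by inverse-variance weighting. This is one simple textbook approach and is not taken from the report. The function name and the example numbers are hypothetical, and the two measurement errors are assumed to be independent.

```python
def fuse(estimate_a, variance_a, estimate_b, variance_b):
    """Fuse two independent estimates, weighting each by its reliability."""
    # The less noisy estimate (smaller variance) receives the larger weight.
    weight_a = variance_b / (variance_a + variance_b)
    fused = weight_a * estimate_a + (1.0 - weight_a) * estimate_b
    fused_variance = (variance_a * variance_b) / (variance_a + variance_b)
    return fused, fused_variance


# Hypothetical example: two range readings of the same obstacle, in meters.
distance, variance = fuse(10.0, 4.0, 12.0, 4.0)
print(distance, variance)
```

The fused variance is always smaller than either input variance, which is the basic reason a fused estimate can outperform any single sensor.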

Explore our report to uncover in-depth insights - https://www.transparencymarketresearch.com/sensor-fusion-market.html

Key Drivers

Rise in Adoption of ADAS and Autonomous Vehicles: The surge in demand for advanced driver assistance systems (ADAS) and autonomous vehicles is a key driver for the sensor fusion market. By combining data from cameras, radars, and LiDAR sensors, sensor fusion technology improves situational awareness, enabling safer and more efficient driving. Industry collaborations, such as Tesla’s Autopilot, highlight the transformative potential of sensor fusion in automotive applications.

Increased R&D in Consumer Electronics: Sensor fusion enables consumer electronics like smartphones, wearables, and smart home devices to deliver enhanced user experiences. Features such as motion sensing, augmented reality (AR), and interactive gaming are powered by the integration of multiple sensors, driving market demand.

Key Player Strategies

STMicroelectronics, InvenSense, NXP Semiconductors, Infineon Technologies AG, Bosch Sensortec GmbH, Analog Devices, Inc., Renesas Electronics Corporation, Amphenol Corporation, Texas Instruments Incorporated, Qualcomm Technologies, Inc., TE Connectivity, MEMSIC Semiconductor Co., Ltd., Kionix, Inc., Continental AG, and PlusAI, Inc. are the leading players in the global sensor fusion market.

In November 2022, STMicroelectronics introduced the LSM6DSV16X, a 6-axis inertial measurement unit embedding Sensor Fusion Low Power (SFLP) technology and AI capabilities.

In June 2022, Infineon Technologies launched a battery-powered Smart Alarm System, leveraging AI/ML-based sensor fusion for superior accuracy.

These innovations aim to address complex data integration challenges while enhancing performance and efficiency.

Regional Analysis

Asia Pacific emerged as a leading region in the sensor fusion market in 2023, driven by the presence of key automotive manufacturers, rising adoption of ADAS, and advancements in sensor technologies. Increased demand for smartphones, coupled with the integration of AI algorithms and edge computing capabilities, is further fueling growth in the region.

Other significant regions include North America and Europe, where ongoing advancements in autonomous vehicles and consumer electronics are driving market expansion.

Market Segmentation

By Offering: Hardware and Software

By Technology: MEMS and Non-MEMS

By Algorithm: Kalman Filter, Bayesian Filter, Central Limit Theorem, Convolutional Neural Network

By Application: Surveillance Systems, Inertial Navigation Systems, Autonomous Systems, and Others

By End-use Industry: Consumer Electronics, Automotive, Home Automation, Healthcare, Industrial, and Others


0 notes

Text

Oooooooh!

This is excellent!

I am thinking an inertial measurement unit like the 6050s I have lying around could do something similar with the skull!

I bet I could detect pettsies!

Maybe... maybe 2 of them so I can detect if the wave is coming from the top or bottom of the skull?

testing and debating if i should add an expression change on tap feature

10% of the time it randomises

3K notes
